Try ChatGPT: One Question You Don't Want to Ask Anymore

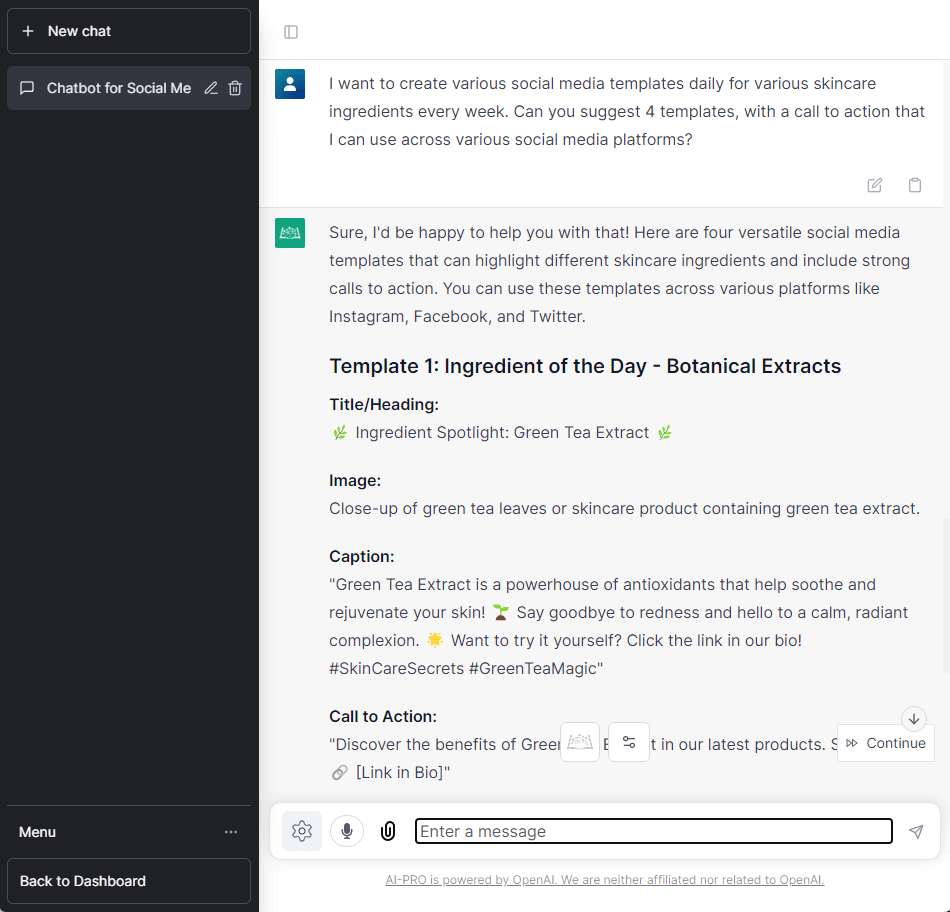

I recently posted about the convergence of LLMs: the trend of several clusters of similarly sized models converging on a certain baseline across evals. With that many record-breaking evals throughout the year, the gains must have accumulated, and the breakthrough should be obvious in the products everyone uses every day! Yet some paint a bleak picture for a big-tech industry that hasn't figured out how to make useful and economically sustainable Gen AI products. If you're like me, you are interested in Gen AI and closely follow what happens in the industry; just be cautious with all those heavy claims and breakthroughs you come across every day. I find Gen AI exciting and captivating! I find that to be a refreshing amount of transparency from a search engine. But with open-source AI tools, governments and organizations gained transparency and control over how their data was being processed and secured. If you ever need help or guidance, feel free to reach out. As always, if you feel like it, I'm curious to hear your thoughts!

This highlights a possible lack of diverse fine-tuning data in the open-source community and the need to optimize models for a broader set of code-related tasks. The best part is that you don't need to learn GritQL to use Grit. Please use your best judgment when chatting. ChatGPT isn't just for chatting! This is similar to chatting with newer models and tackling coding tasks with AI assistants. As he points out, there's now a free, open-weight 7B model beating a monstrous 1.7T LLM from OpenAI at coding! Feeling lonely isn't just about feeling sad or left out. At Middleware, we're practically open-source campaigners, so we've rolled out our own stellar open-source DORA Metrics! There are cases where GPT performs better at data presentation but lags behind LLAMA 3.1 in accuracy, and there have been cases, like the DORA score, where GPT was able to do the math better.
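The DORA "math" mentioned above is mostly simple arithmetic over deployment records. As a rough sketch (not Middleware's implementation), here is one way to compute the four key metrics, assuming a hypothetical `Deployment` record with commit, deploy, and restore timestamps. Definitions vary between tools; using the median for lead time and measuring time to restore from the failing deployment are both assumptions made here for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Dict, List, Optional


@dataclass
class Deployment:
    """Hypothetical deployment record; real tools (including Middleware) may use a different schema."""
    commit_time: datetime                     # first commit of the change
    deploy_time: datetime                     # when the change reached production
    failed: bool = False                      # did this deployment cause a production failure?
    restored_time: Optional[datetime] = None  # when service was restored, if it failed


def hours(delta: timedelta) -> float:
    """Convert a timedelta to fractional hours."""
    return delta.total_seconds() / 3600


def dora_metrics(deploys: List[Deployment], window_days: float) -> Dict[str, float]:
    """Compute the four key DORA metrics over an observation window (assumed definitions)."""
    if not deploys or window_days <= 0:
        raise ValueError("need at least one deployment and a positive window")
    # Deployment frequency: deployments per day in the window.
    frequency = len(deploys) / window_days
    # Lead time for changes: median commit-to-production time, in hours.
    lead_time = median(hours(d.deploy_time - d.commit_time) for d in deploys)
    # Change failure rate: fraction of deployments that caused a failure.
    failures = [d for d in deploys if d.failed]
    failure_rate = len(failures) / len(deploys)
    # Time to restore: mean hours from the failing deployment to restoration,
    # counting only failures that have a restore timestamp.
    restores = [hours(d.restored_time - d.deploy_time)
                for d in failures if d.restored_time is not None]
    time_to_restore = sum(restores) / len(restores) if restores else 0.0
    return {
        "deployment_frequency_per_day": frequency,
        "lead_time_hours": lead_time,
        "change_failure_rate": failure_rate,
        "time_to_restore_hours": time_to_restore,
    }
```

Keeping this arithmetic in plain code and asking a model only to describe the resulting numbers is one option for sidestepping the calculation differences between models noted above.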

Both LLAMA 3.1 and GPT-4o are highly capable of deriving inferences from processed data and making Middleware's DORA metrics more actionable and digestible for engineering leaders, resulting in more efficient teams. Our earlier experiments with older LLAMA models led us to believe that GPT was way ahead, but the recent LLAMA 3.1 405B model is on par with GPT-4o. We added a UI for users to add a token, choose a model, and generate an AI summary; added APIs that generate AI summaries for all four key trends; and enabled users to copy the summary. I wrote this article, and I hold the copyright, that is, the right to say who is allowed to copy it. Next, we define some execution settings that tell the Kernel it is allowed to automatically call functions we provide (more on this later). If you use an open-source AI to build this predictive model, you get the authority to review the code thoroughly: you can check whether the default settings are skewing predictions, look for hidden errors or biases, and build an app that is thorough, accurate, and, most importantly, unbiased. So, if you are a developer with some clever tricks and skills up your sleeve that could make a difference in a new technology, then open source is your thing.

Specifically, the models are separated into two clusters, depicted by the green and red shaded regions in the right scatterplot. The models in the green region perform similarly on HumanEval and LCB-Easy, whereas the models in the red region perform well on HumanEval but lag behind on LCB-Easy. Just as everyone deserves the necessities of life, like food, clothing, and shelter, everyone has the right to the world's cutting-edge technologies as well. This switch enabled CERN to process and analyze large datasets efficiently, saving on software licensing fees and ensuring continuous integration of new technologies. We use Fireworks AI APIs for large language models. The knowledge in these models comes from their training on terabytes of web content. Layer normalization keeps the model stable during training by normalizing each layer's output (for each token, across its features) to a mean of 0 and a variance of 1. This smooths learning, making the model less sensitive to changes in weight updates during backpropagation. Knowing these images are real helps build trust with your audience.
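The layer normalization step described above is easiest to see on a single activation vector. This is a minimal pure-Python sketch (frameworks such as PyTorch apply the same idea to whole tensors). It also includes the learnable scale `gamma` and shift `beta` that standard layer normalization applies after normalizing, which the paragraph does not mention, plus a small `eps` for numerical stability. With the defaults (`gamma` of 1, `beta` of 0), the output has mean 0 and variance very close to 1.

```python
import math
from typing import List, Optional, Sequence


def layer_norm(x: Sequence[float],
               gamma: Optional[Sequence[float]] = None,
               beta: Optional[Sequence[float]] = None,
               eps: float = 1e-5) -> List[float]:
    """Normalize one activation vector to zero mean and unit variance, then scale and shift."""
    n = len(x)
    if n == 0:
        raise ValueError("layer_norm needs a non-empty vector")
    mean = sum(x) / n
    # Population variance (divide by n), as standard layer normalization uses.
    var = sum((v - mean) ** 2 for v in x) / n
    inv_std = 1.0 / math.sqrt(var + eps)  # eps avoids division by zero for constant inputs
    normalized = [(v - mean) * inv_std for v in x]
    # Learnable per-feature scale and shift; identity by default.
    gamma = [1.0] * n if gamma is None else gamma
    beta = [0.0] * n if beta is None else beta
    return [g * v + b for g, v, b in zip(gamma, normalized, beta)]
```

Because the result depends only on the vector's mean and spread, scaling every input by the same factor (for example, `[10, 20, 30, 40]` instead of `[1, 2, 3, 4]`) leaves the output almost unchanged, which is one way to see why the model becomes less sensitive to the size of weight updates.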
